Sri Rampai, Wangsa Maju

Kuala Lumpur, Malaysia

adyaakob@gmail.com

+60 102369037

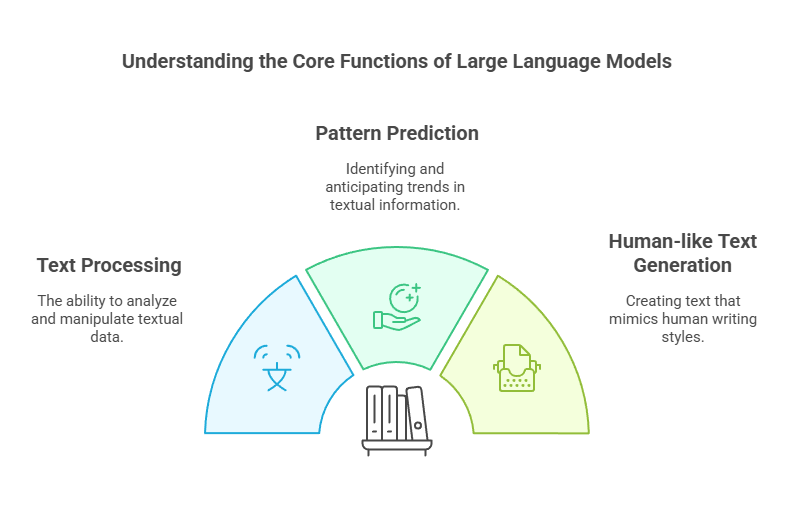

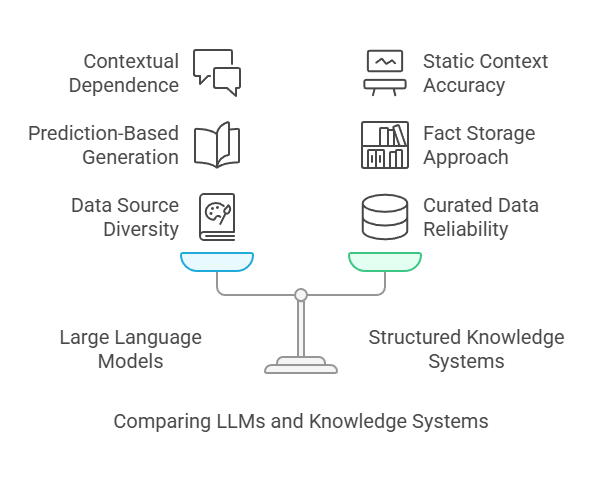

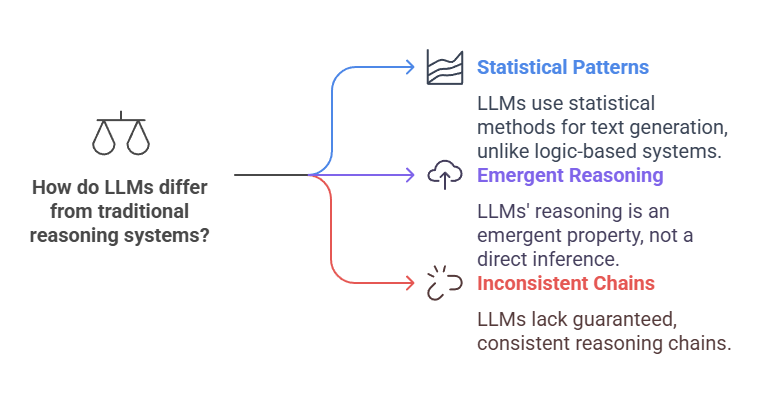

Large Language Models (LLMs) are tools designed to work with language. They are called “language models” because their main job is to interpret and generate text based on patterns learned from large amounts of written data, such as books and websites. They do not “know” things the way a database stores facts, and they do not “think” the way a person does. Instead, they predict what text is likely to come next. This makes them good at creating and interpreting text, but it does not guarantee accuracy or sound logic. Their strength lies in language, not in storing facts or solving problems as a human does.

Large Language Models (LLMs) are a category of artificial intelligence (AI) systems designed to process and generate human-like text. The emphasis on “language” is deliberate. While these models can appear to have deep knowledge or reasoning ability, their core function is to recognize and predict patterns in textual data.

The name “Large Language Model” reflects where these systems are strongest: processing and generating text. They are not inherently robust knowledge repositories or reliable reasoning engines. Their core skill is recognizing and reproducing linguistic patterns from extensive training data, which can be very powerful but must not be mistaken for verified knowledge or logical proof.
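
The idea of predicting likely next text from learned patterns can be illustrated with a deliberately tiny sketch. It is not how a real LLM is built (those use neural networks trained on tokens, not word counts); it is a toy bigram counter, and the names `train_bigram_model`, `predict_next`, and the sample `corpus` are hypothetical, chosen only for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy training data (an assumption for this example, not real training text).
corpus = "the cat sat on the mat . the cat sat on the rug . the dog ate the fish ."
model = train_bigram_model(corpus)

print(predict_next(model, "cat"))    # most frequent word after "cat" in the corpus
print(predict_next(model, "paris"))  # unseen word: the model has nothing to offer
```

The sketch only ever returns whatever followed a word most often in its training text. It has no way to check whether that continuation is true, and it has nothing to say about words it never saw, which mirrors (in a very simplified way) why pattern prediction should not be mistaken for stored knowledge or reasoning.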